Model guardrails alone can’t keep AI systems safe. This is something our research team sees every month when testing frontier models (results published on our AI Security Leaderboards). While model guardrails are useful for setting behavioral boundaries, attackers are persistent. With the constant barrage of prompt injections, jailbreak attempts and novel exploits, organizations are left exposed to data leaks, compliance failures, and adversarial manipulations that guardrails weren’t designed to stop. To truly secure AI applications and agents, organizations need runtime guardrails that defend in real-time by detecting and blocking threats as they happen.

Why Enterprises Can’t Rely on Model Guardrails Alone

AI model guardrails are preventive tools, typically built into training, reinforcement learning, or prompt rules. They shape the intended behavior of AI systems, restricting what models should and shouldn’t do. But once a model is in production, real-world usage exposes its limits through things like:

- Attackers inventing new prompts and exploits that sidestep guardrail restrictions

- Sensitive information, such as PII or source code leakings even when outputs appear safe

- Slow adaptation, with model guardrails often requiring retraining or reconfiguration before they can handle new threats

In other words, model guardrails serve as a guide, but they don’t guarantee security.

Runtime Guardrails Catch What Model Guardrails Miss

F5 AI Guardrails fill the gap model guardrails leave behind. Operating at runtime, runtime guardrails inspect both prompts and outputs in real time, blocking or flagging risky interactions before issues arise. For example, prompt injection and jailbreak guardrails can detect manipulative prompts that attempt to override policies. Similarly, data loss prevention guardrails stop sensitive data from leaving the organization.

Runtime guardrails can operate in a block mode (preventing violations) or audit mode (logging events for oversight). Unlike model guardrails, these runtime guardrails are continuously updated, ensuring defenses keep pace with evolving attacks.

To put it simply, model guardrails are like the fences that define where people can go around a building. Runtime guardrails are the security checkpoints at the entrance—inspecting everything that crosses the threshold. One sets the boundaries; the other ensures nothing harmful slips through.

Key Differences

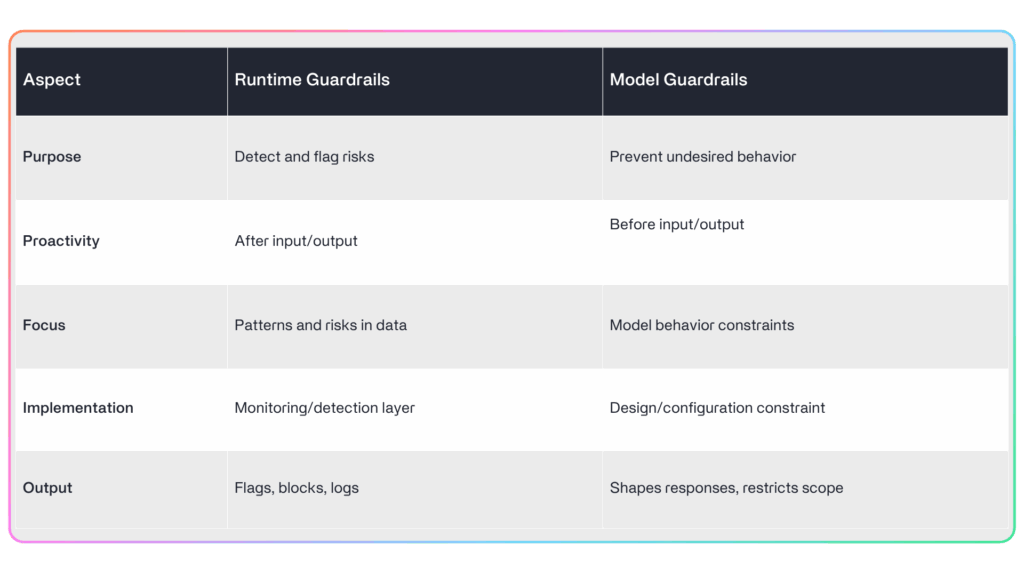

While both runtime guardrails and model guardrails contribute to AI security, they operate in fundamentally different ways. This table outlines the distinctions at a glance.

This contrast makes one thing clear: model guardrails help guide AI, but runtime guardrails are what keep it truly secure in production.

Why Enterprises Need Runtime Guardrails to Secure AI

While runtime guardrails provide the stronger layers of protection at inference, their real value comes when paired with model guardrails, working together to create a defense-in-depth strategy.

Model guardrails establish the baseline boundaries for model behavior. Runtime guardrails extend that protection, catching novel threats and circumvention attempts that model guardrails can’t anticipate. For example, a model guardrail might prohibit a model from giving medical advice, while a runtime guardrail detects and blocks attempts to sidestep that rule through indirect phrasing or manipulative prompts.

This layered approach offers the strongest posture for AI security, enabling enterprises to innovate with confidence while staying resilient against ever-evolving risks.